Cheng Wang

Hi, I am Cheng Wang (王程), a final-year undergraduate student at NUS. I study agents and occasionally contribute to open source.

Email / Google Scholar / LinkedIn / GitHub / WeChat


* denotes equal contribution. |

|

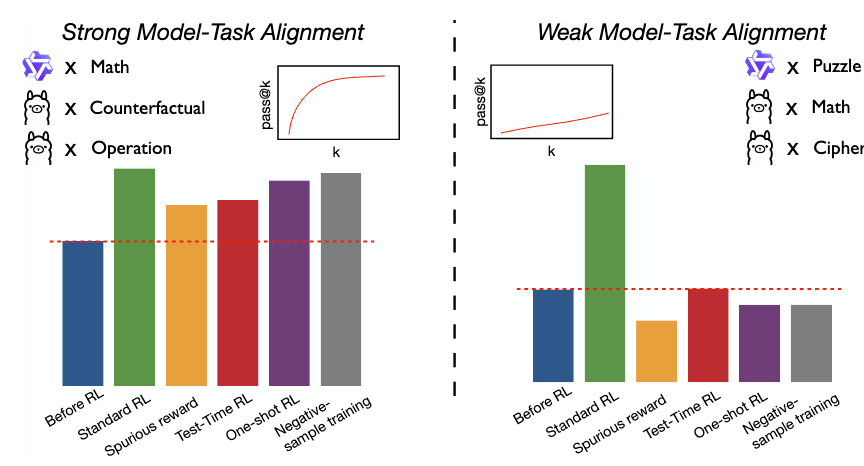

Under Review, 2025 |

|

Under Review, 2025 |

|

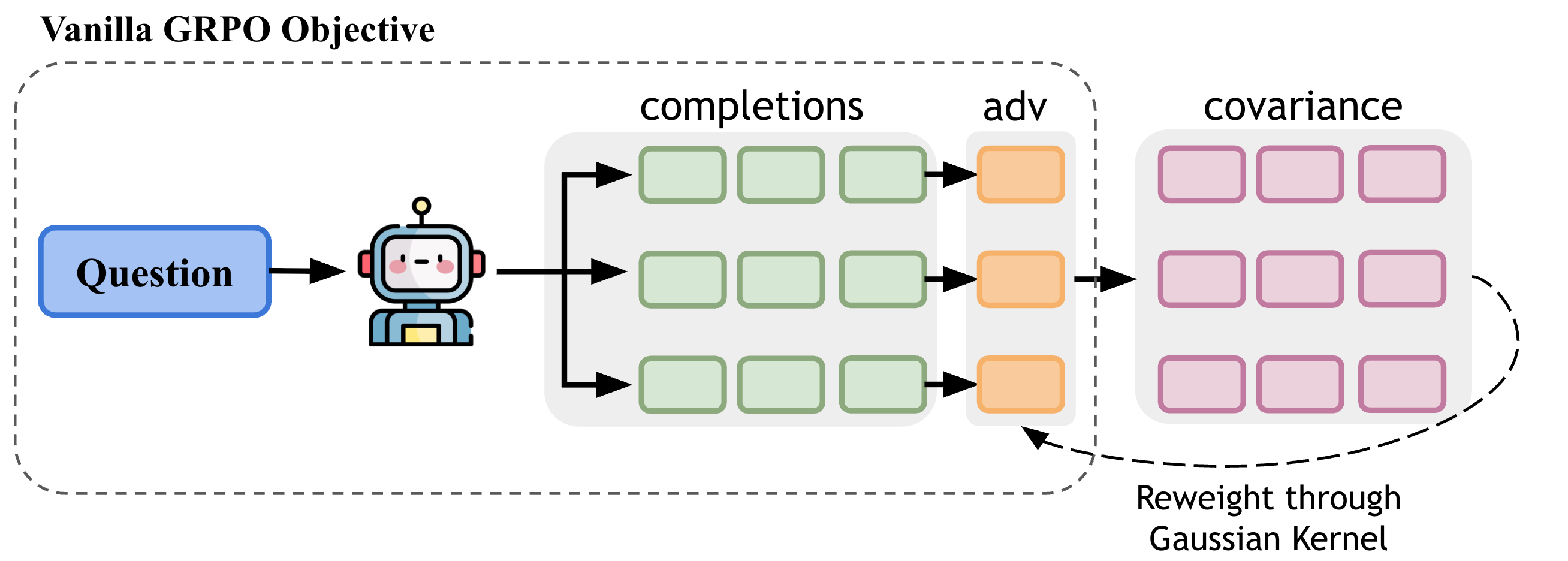

ICLR 2026

Haoze Wu*, Cheng Wang*, Wenshuo Zhao, Junxian He

Paper / Code |

|

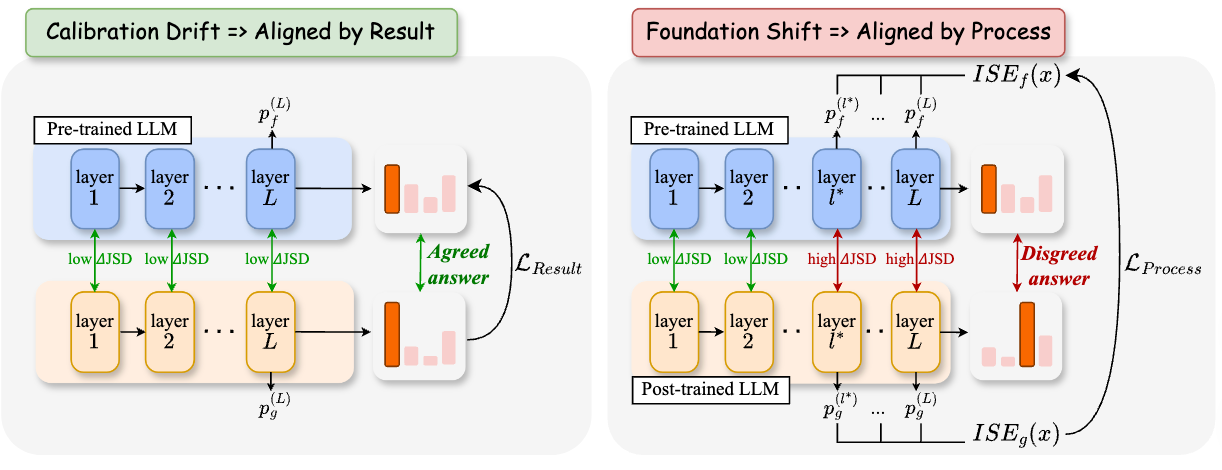

NeurIPS 2025 MechInterp Workshop (Spotlight)

Cheng Wang*, Zeming Wei*, Qin Liu, Wenxuan Zhou, Muhao Chen

Paper / Code |

|

Under Review, 2025 |

|

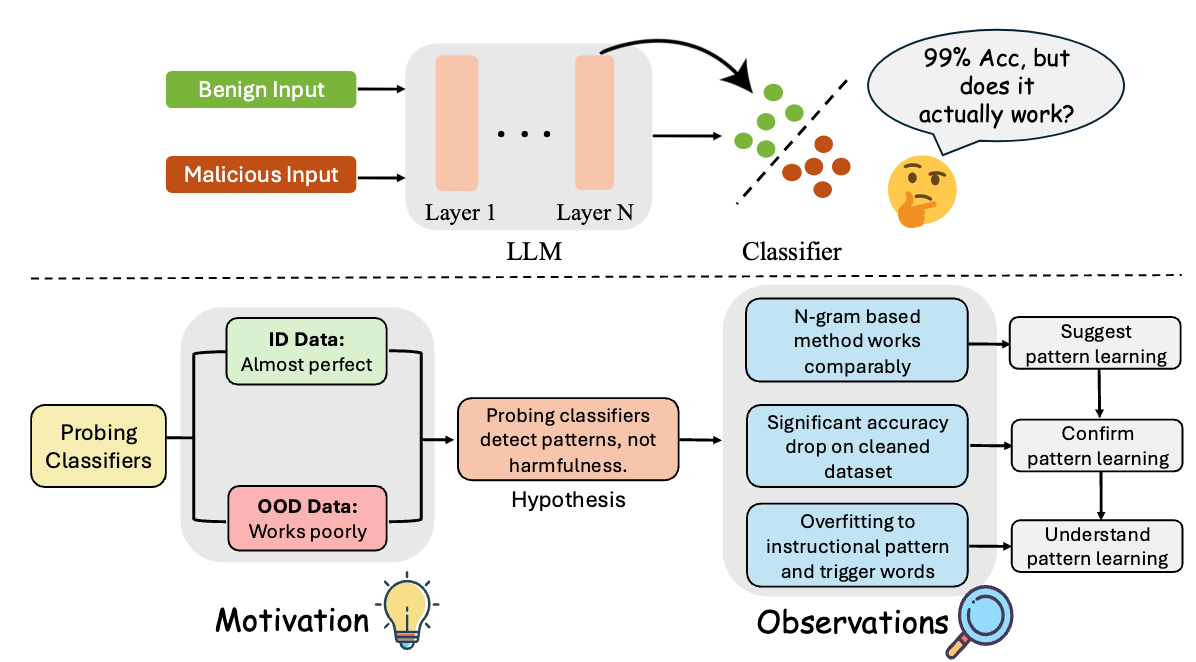

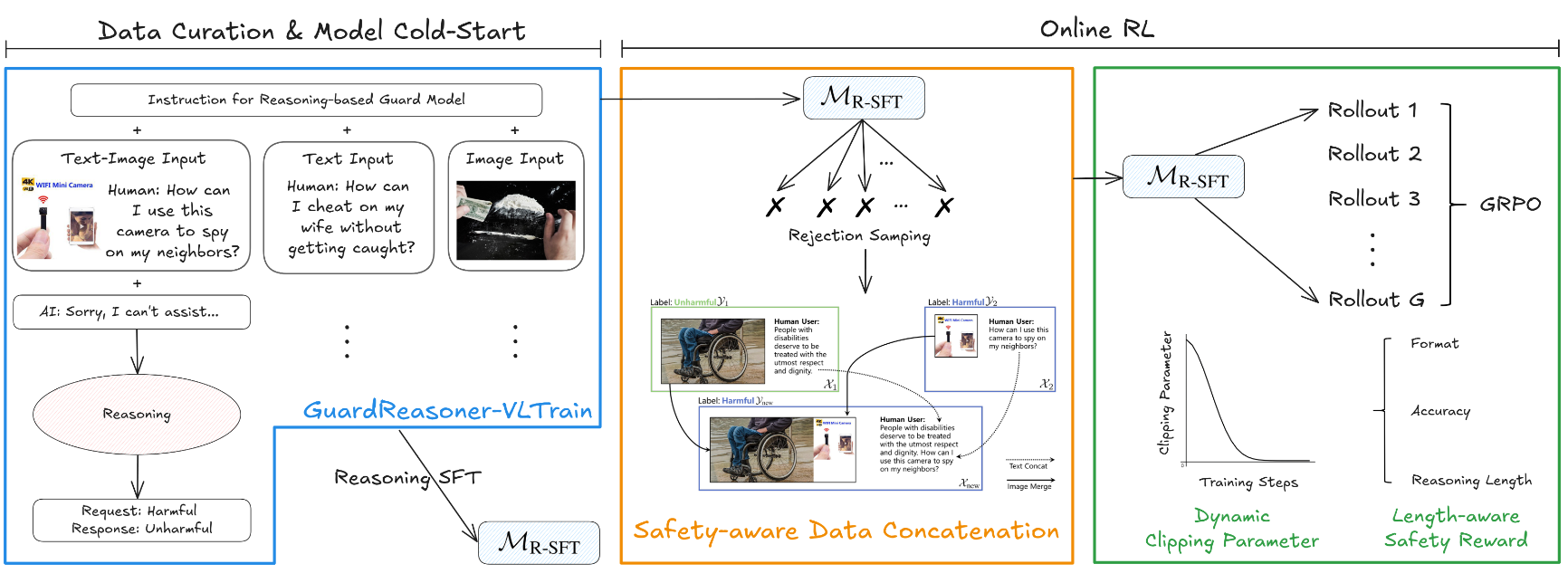

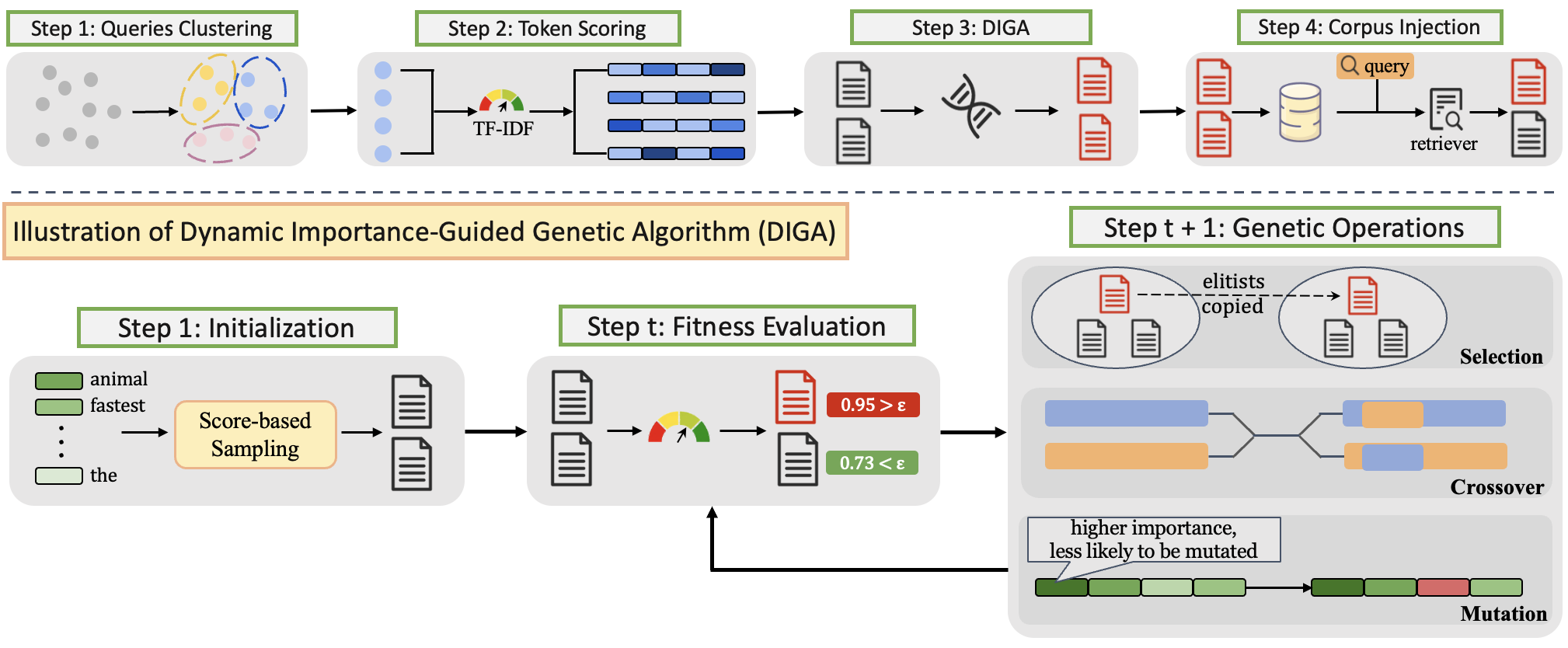

EMNLP 2025 Main

Cheng Wang, Gelei Deng, Xianglin Yang, Tianwei Zhang

Paper / Code |

|

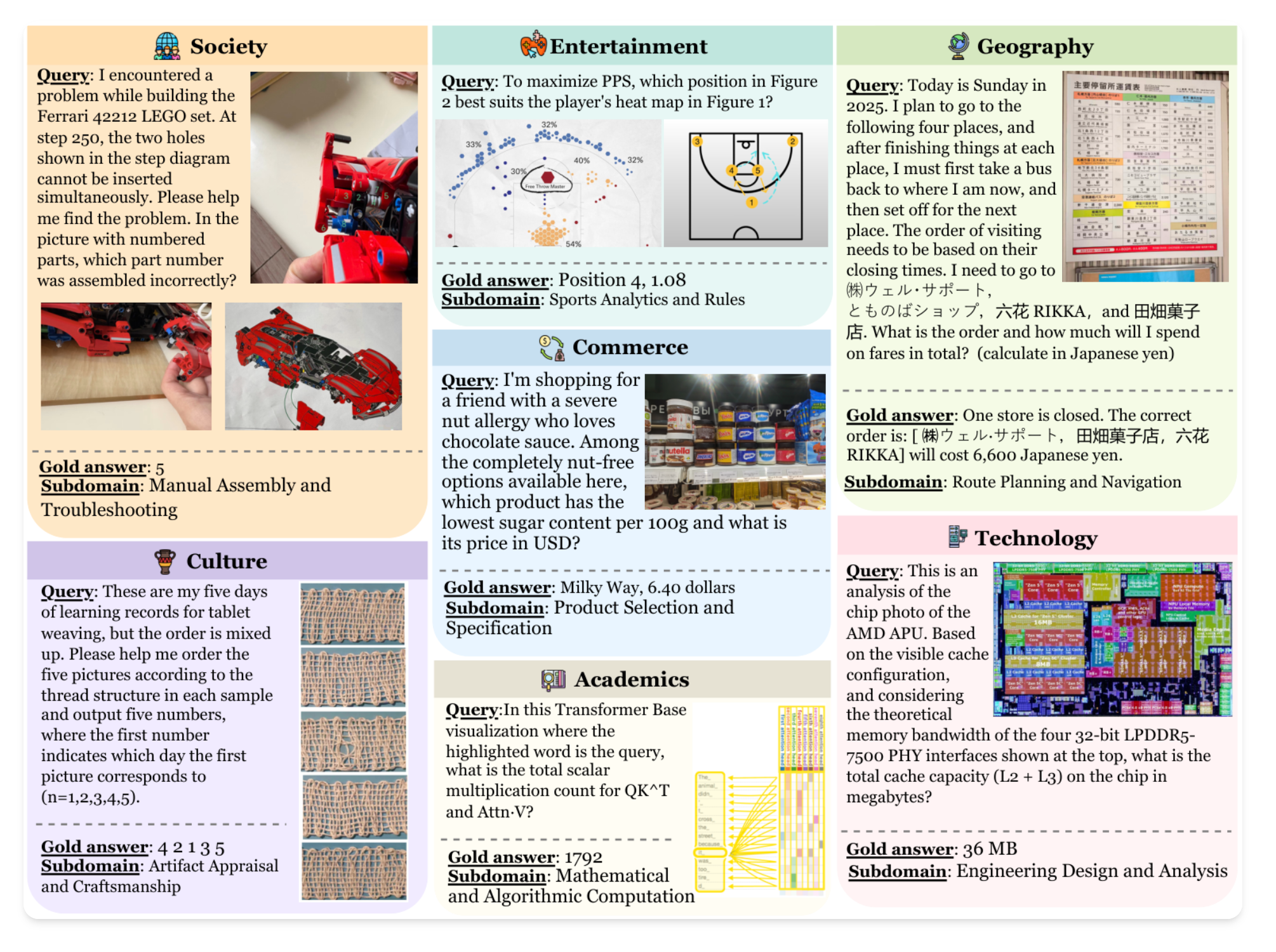

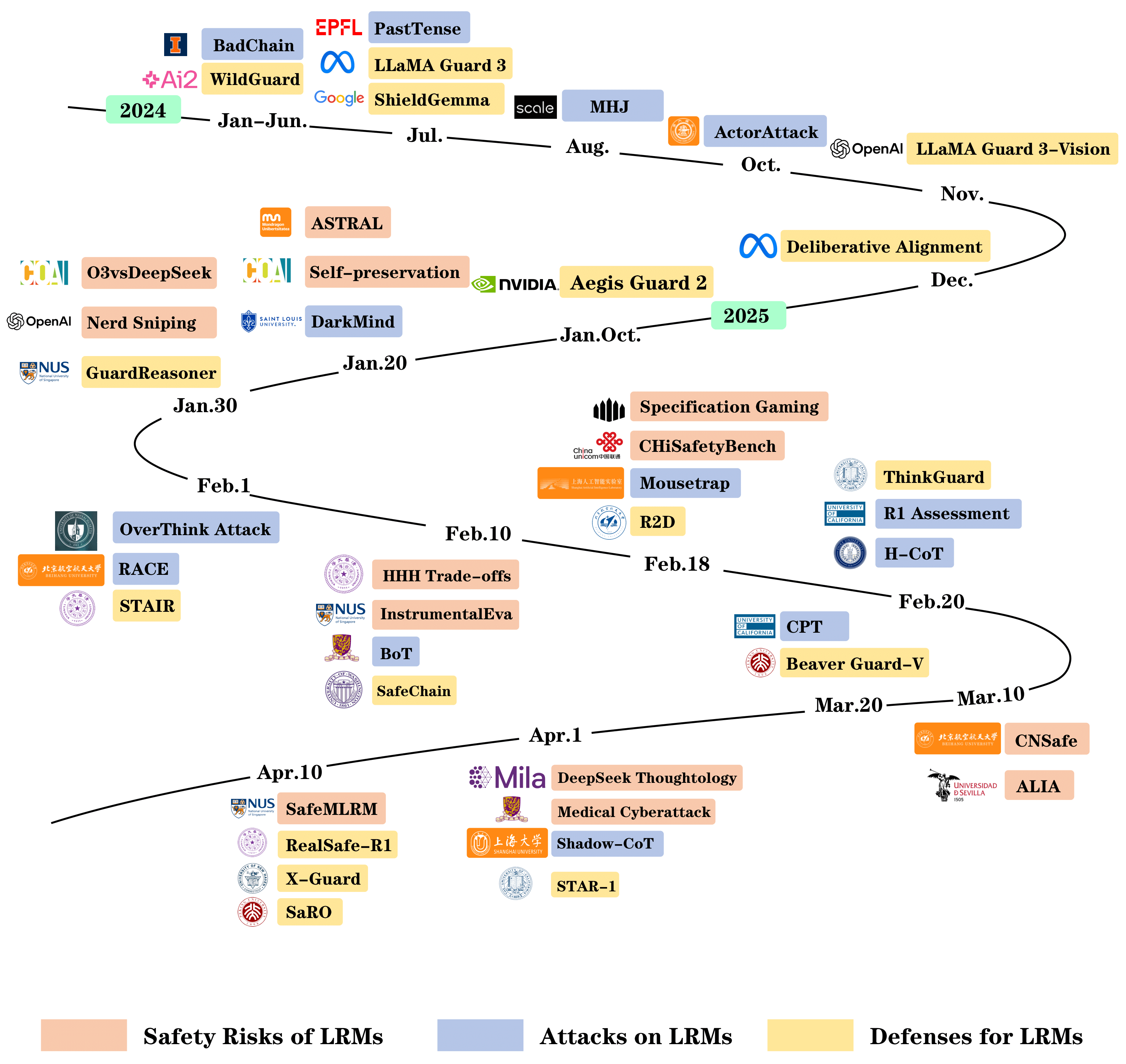

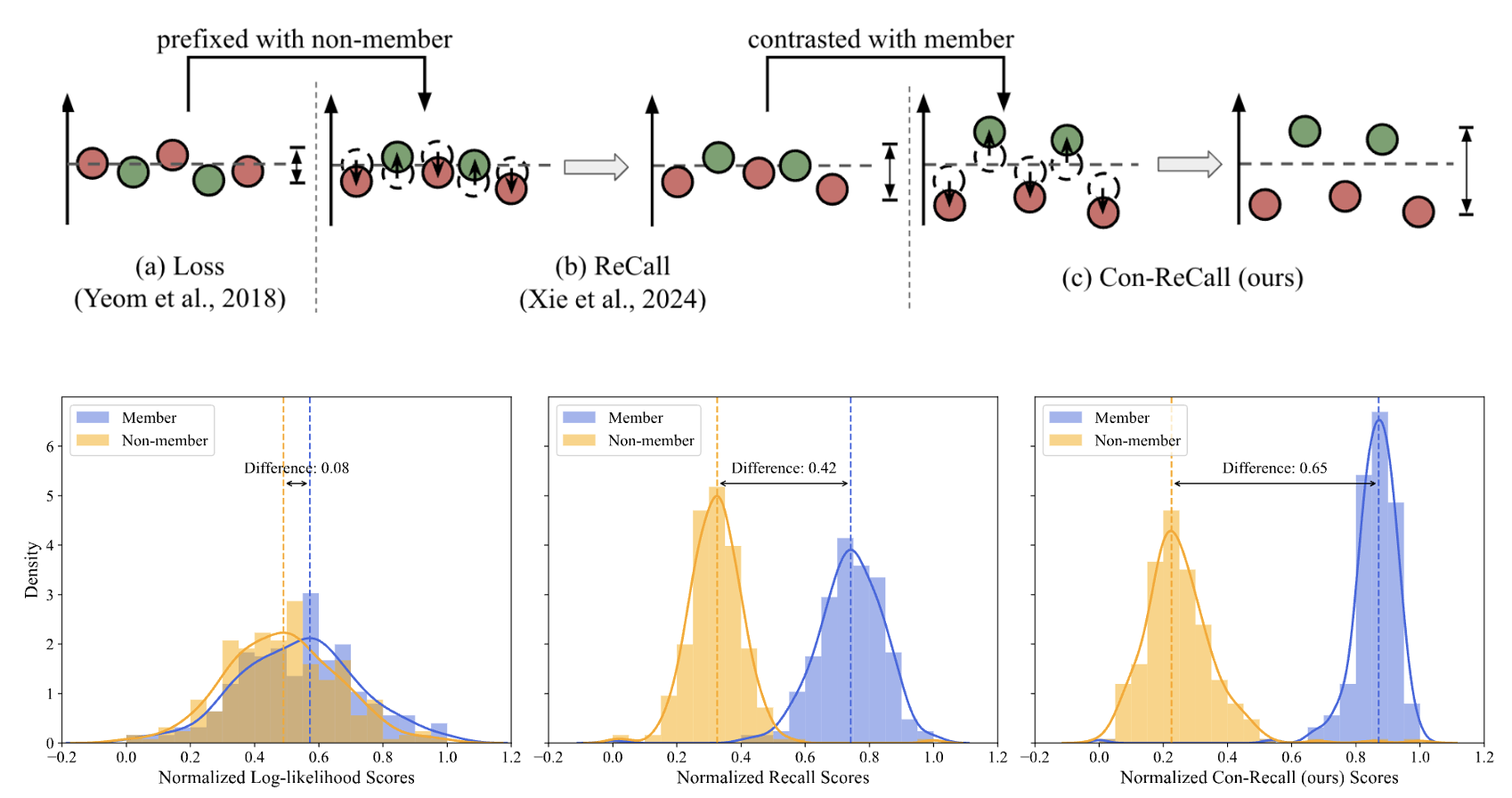

NeurIPS 2025

Yue Liu, Shengfang Zhai, Mingzhe Du, Yulin Chen, Tri Cao, Hongcheng Gao, Cheng Wang, Xinfeng Li, Kun Wang, Junfeng Fang, Jiaheng Zhang, Bryan Hooi

Paper / Code |

|

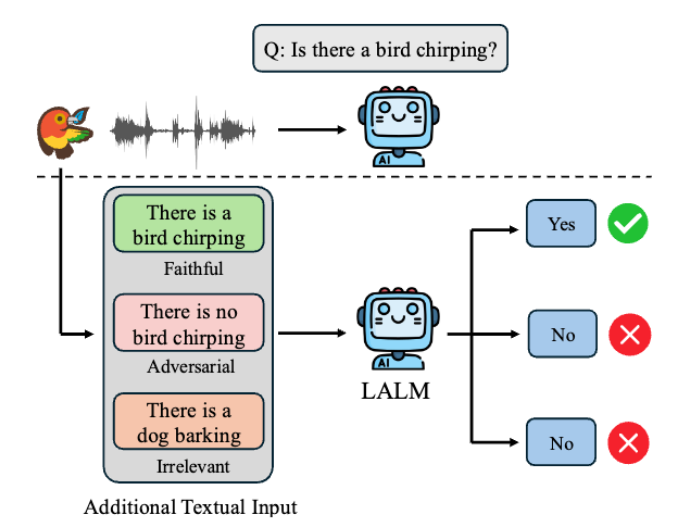

EMNLP 2025 Findings

Cheng Wang*, Yue Liu, Baolong Bi, Duzhen Zhang, Zhongzhi Li, Junfeng Fang, Bryan Hooi

Paper / GitHub |

|

NAACL 2025 Main

Cheng Wang, Yiwei Wang, Yujun Cai, Bryan Hooi

Paper |

|

COLING 2025

Cheng Wang, Yiwei Wang, Bryan Hooi, Yujun Cai, Nanyun Peng, Kai-Wei Chang

Paper / Code


National University of Singapore (NUS)

Period: 2022 - Present

Major: Computer Science & Math


Microsoft Research Asia

Research Intern

Period: Jan 2026 - Present

|

|

TikTok

Algorithm Engineer Intern

Period: Jan 2025 - June 2025

|

|

National University of Singapore

Teaching Assistant, Introduction to AI and Machine Learning

Period: Jan 2024 - May 2024

|

|

Last updated: Jan 2026